20.09.24

Artificial Intelligence (AI) is revolutionizing various sectors by enhancing efficiency, enabling advanced data analysis, and driving innovation. From automating routine tasks to offering insights through deep learning, AI's advantages are significant and transformative. However, these benefits come with complex regulatory challenges that need to be addressed to ensure the safe and ethical use of AI technologies.

The EU AI Act introduces a regulatory framework designed to mitigate risks associated with AI, focusing on transparency, data governance, safety, and robustness, among other things. This regulation categorizes AI systems based on their level of risk and their technological features, specifically addressing high-risk applications that could significantly impact individuals and society.

While the EU AI Act requires AI-specific compliance measures tailored to the unique risks posed by AI systems, these measures do not need to form an entirely new governance framework. Instead, they can be integrated into the organization's existing governance structures, specifically within the second line of defense (2LoD), i.e., oversight functions such as risk management and compliance.

By leveraging existing processes and functions, organizations can align AI governance with established risk management, compliance, and operational practices. This approach supports a smooth transition to the new compliance measures without duplicating efforts or creating redundant systems. Building on existing governance structures makes the process more efficient and effective, enabling organizations to meet both current and future regulatory requirements for AI technologies.

Below, we highlight some important governance objectives that different departments can pursue when implementing the EU AI Act's requirements, starting with information security.

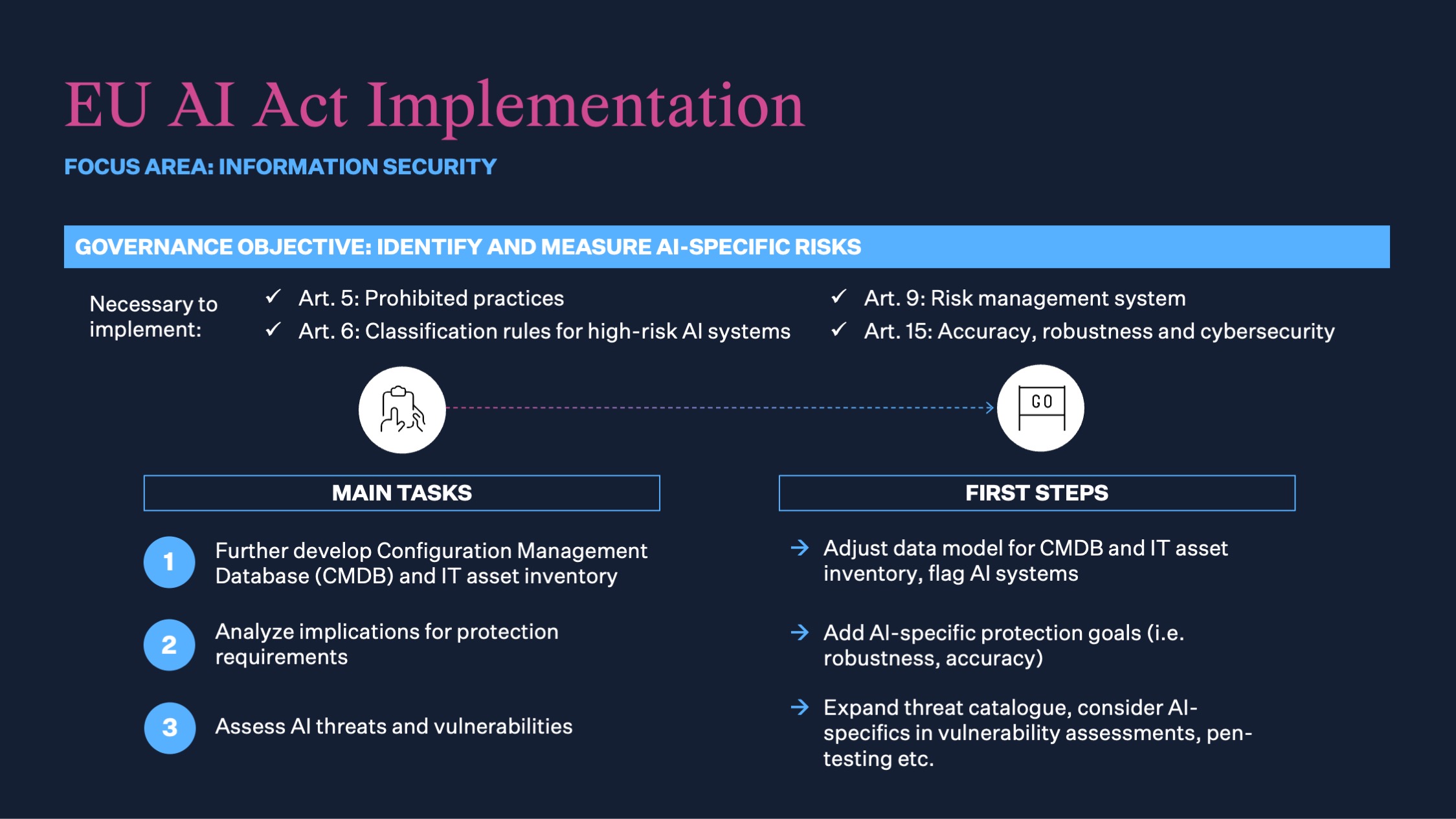

To integrate AI governance successfully into their information security frameworks, organizations need a methodical approach to identifying and measuring AI-specific risks. This begins with updating the Configuration Management Database (CMDB) and IT asset inventory to include specific categories for AI systems, so that AI systems can be comprehensively tracked and managed. Additionally, protection requirements such as robustness, accuracy, and data governance must be carefully analyzed and integrated into the existing risk management protocols. Addressing AI-specific risks also requires organizations to expand their threat catalogue to cover these new threats and to conduct thorough vulnerability assessments and penetration testing.
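As an illustration of extending an asset inventory, the following Python sketch adds an AI-system category and flags AI assets whose protection requirements have not yet been assessed. The category names, the `Asset` record, and the rating values are hypothetical; a real CMDB has its own schema and interfaces, and this does not describe any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum


class AssetCategory(Enum):
    # Hypothetical category scheme; a real CMDB will have its own taxonomy.
    APPLICATION = "application"
    INFRASTRUCTURE = "infrastructure"
    AI_SYSTEM = "ai_system"  # new category so AI systems can be tracked separately


@dataclass
class Asset:
    asset_id: str
    name: str
    category: AssetCategory
    # Protection requirement ratings per dimension, e.g. {"robustness": "high"}.
    protection_requirements: dict[str, str] = field(default_factory=dict)


# AI-specific protection dimensions named in the text; the rating scale is assumed.
AI_PROTECTION_DIMENSIONS = ("robustness", "accuracy", "data_governance")


def missing_ai_assessments(inventory: list[Asset]) -> dict[str, list[str]]:
    """Return, per AI asset, the protection dimensions that have no rating yet."""
    gaps: dict[str, list[str]] = {}
    for asset in inventory:
        if asset.category is not AssetCategory.AI_SYSTEM:
            continue  # non-AI assets keep their existing assessment process
        missing = [d for d in AI_PROTECTION_DIMENSIONS
                   if d not in asset.protection_requirements]
        if missing:
            gaps[asset.asset_id] = missing
    return gaps


inventory = [
    Asset("A-001", "Payroll application", AssetCategory.APPLICATION),
    Asset("A-002", "CV screening model", AssetCategory.AI_SYSTEM,
          {"robustness": "high", "accuracy": "high"}),
]
print(missing_ai_assessments(inventory))  # {'A-002': ['data_governance']}
```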

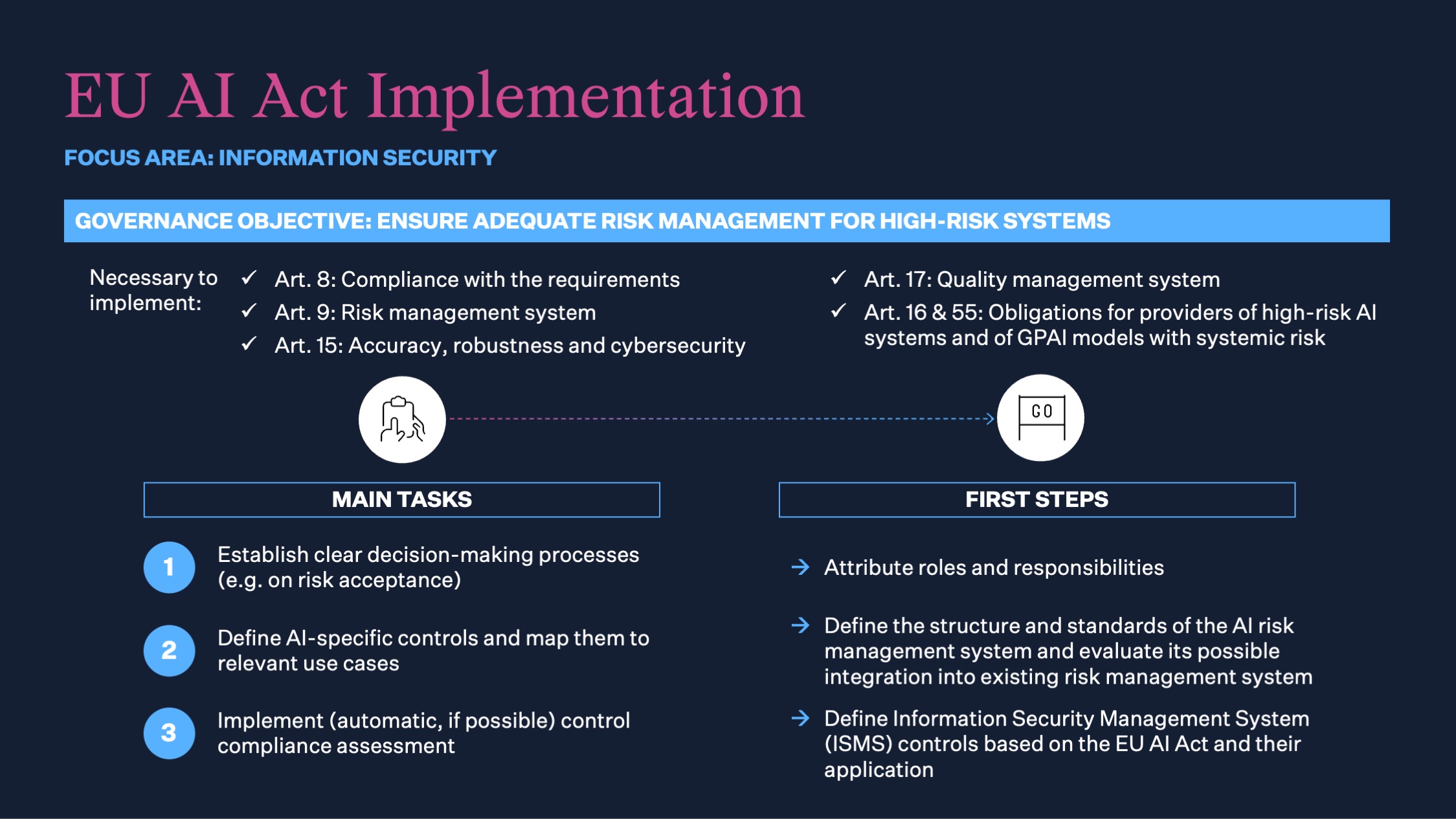

Beyond identifying and measuring risks, organizations must implement robust risk management for high-risk AI systems. This starts with establishing clear decision-making processes for risk acceptance and other critical aspects of AI risk management. Organizations must define AI-specific controls and map them to the relevant use cases within their operations, ensuring that all necessary measures are in place to manage the unique risks posed by AI technologies. Regular compliance assessments are essential to monitor ongoing adherence to both the established controls and regulatory requirements. Ideally, these assessments should be automated to improve efficiency and accuracy.
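A minimal sketch of mapping AI-specific controls to use cases and running an automated assessment, assuming each use case can supply its evidence as a dictionary. The control IDs, descriptions, use-case names, and evidence fields are hypothetical examples, not controls prescribed by the EU AI Act.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Control:
    control_id: str
    description: str
    # Automated test over a use case's evidence record; True means satisfied.
    check: Callable[[dict], bool]


# Hypothetical AI-specific controls; IDs, wording, and evidence fields are assumptions.
CONTROLS = {
    c.control_id: c
    for c in [
        Control("AI-01", "Risk acceptance decision documented",
                lambda ev: ev.get("risk_acceptance_by") is not None),
        Control("AI-02", "Human oversight procedure defined",
                lambda ev: bool(ev.get("human_oversight"))),
        Control("AI-03", "Accuracy tested before release",
                lambda ev: bool(ev.get("accuracy_tested"))),
    ]
}

# Hypothetical mapping of controls to the use cases they apply to.
CONTROL_MAPPING = {
    "credit_scoring": ["AI-01", "AI-02", "AI-03"],
    "internal_faq_chatbot": ["AI-02"],
}


def assess(use_case: str, evidence: dict) -> list[str]:
    """Return the IDs of mapped controls that the evidence does not satisfy."""
    return [cid for cid in CONTROL_MAPPING[use_case]
            if not CONTROLS[cid].check(evidence)]


evidence = {"risk_acceptance_by": "CRO", "human_oversight": True, "accuracy_tested": False}
print(assess("credit_scoring", evidence))  # ['AI-03']
```

Keeping each check as a small function attached to its control means a new control can be added to the mapping without changing the assessment loop, which makes scheduled, automated runs straightforward.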

Another business function that will be strongly affected by the EU AI Act is outsourcing and vendor management. Effective governance in this domain is vital to ensure that third-party relationships are managed with the same rigor and compliance as internal processes, especially in light of the EU AI Act’s requirements.

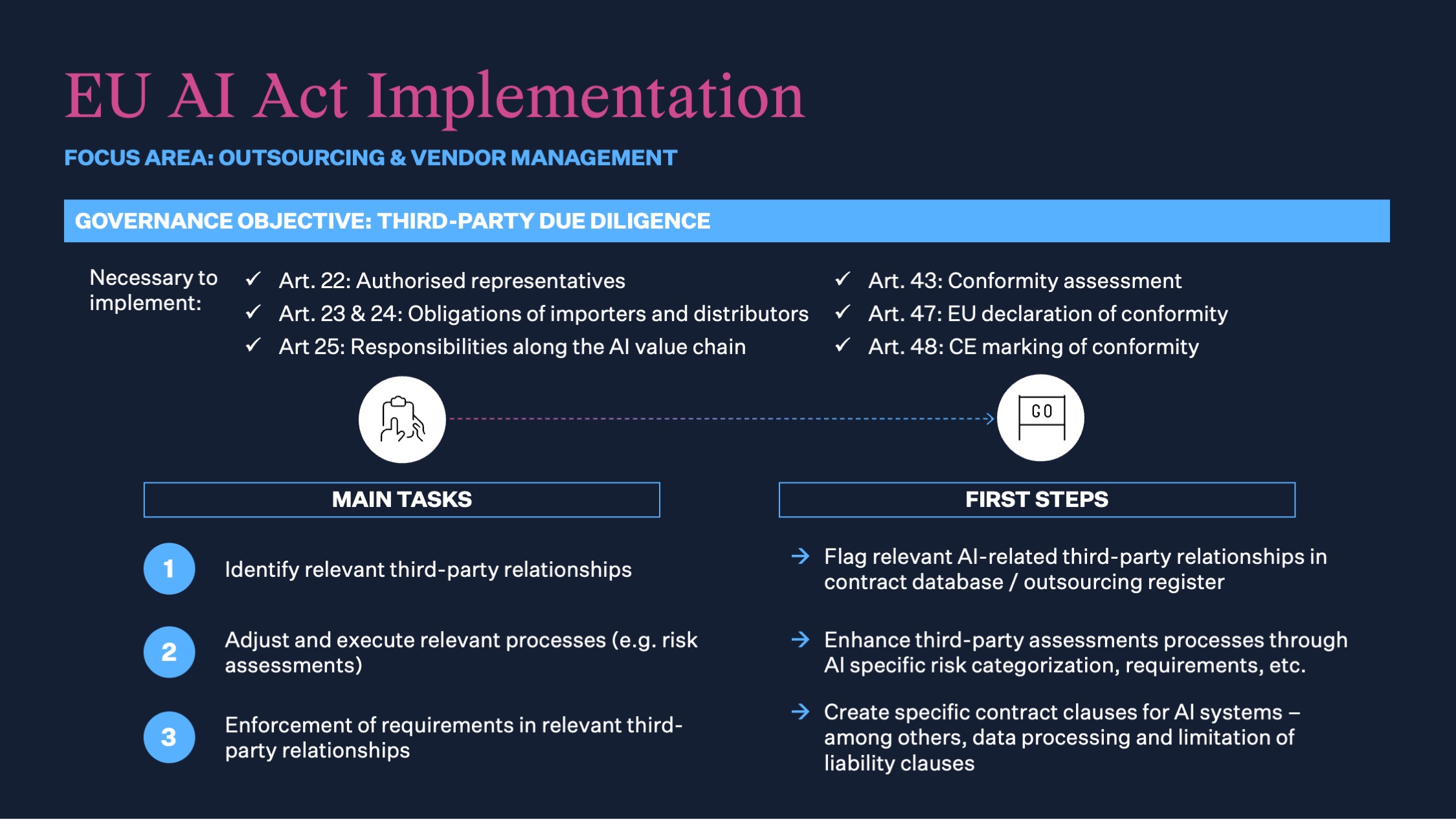

A key governance objective in this area is conducting thorough third-party due diligence. This involves identifying relevant third-party relationships and ensuring they are flagged appropriately in the organization’s contract database or outsourcing register. Once these relationships are identified, existing assessment processes need to be enhanced to incorporate AI-specific risk categorization and requirements. Moreover, it is essential to create specific contract clauses for AI systems. These clauses should cover aspects such as data processing, security requirements, and limitation of liability.
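The following sketch shows one way to flag AI-related entries in a contract register and report which of the clause topics named above (data processing, security requirements, limitation of liability) are still missing. The `ContractEntry` structure and the keyword list are assumptions for illustration; a keyword match is only a crude pre-filter, and flagged entries would still need manual review.

```python
from dataclasses import dataclass, field

# Clause topics named in the text; representing them as strings is an assumption.
REQUIRED_AI_CLAUSES = {"data_processing", "security_requirements", "limitation_of_liability"}

# Hypothetical keywords used to pre-select AI-related relationships for review.
AI_KEYWORDS = ("machine learning", "ai model", "chatbot", "large language model")


@dataclass
class ContractEntry:
    contract_id: str
    vendor: str
    service_description: str
    clauses: set = field(default_factory=set)
    ai_flag: bool = False


def flag_ai_relationships(register):
    """Set ai_flag on entries whose service description mentions an AI keyword."""
    for entry in register:
        text = entry.service_description.lower()
        if any(keyword in text for keyword in AI_KEYWORDS):
            entry.ai_flag = True


def missing_ai_clauses(register):
    """Map each flagged contract to the required AI clause topics it lacks."""
    report = {}
    for entry in register:
        missing = REQUIRED_AI_CLAUSES - entry.clauses
        if entry.ai_flag and missing:
            report[entry.contract_id] = sorted(missing)
    return report


register = [
    ContractEntry("C-10", "Vendor A", "Data center hosting"),
    ContractEntry("C-11", "Vendor B", "Chatbot for customer service", {"data_processing"}),
]
flag_ai_relationships(register)
print(missing_ai_clauses(register))
# {'C-11': ['limitation_of_liability', 'security_requirements']}
```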

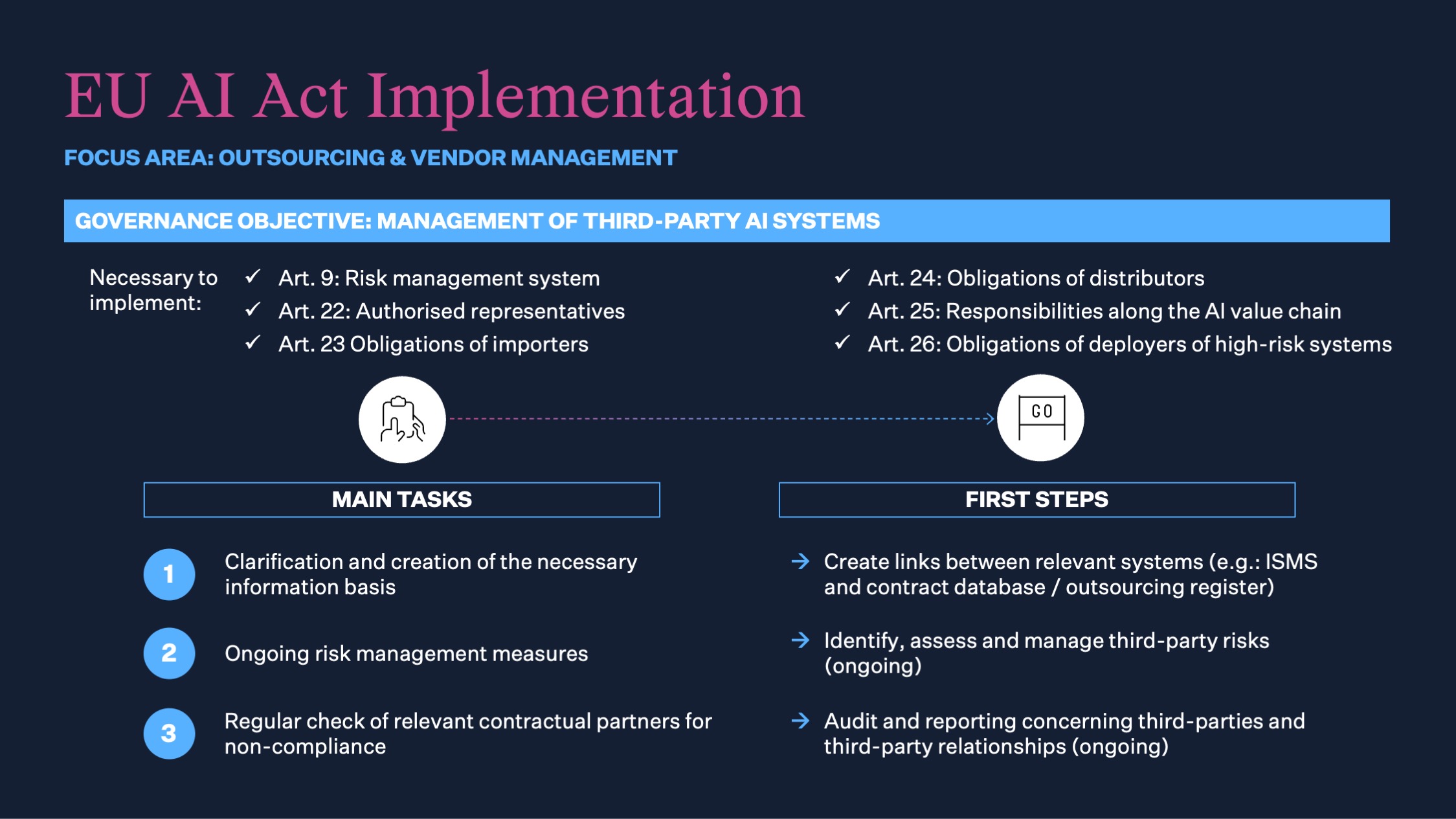

In addition to third-party due diligence, managing third-party AI systems is another critical governance objective. This requires establishing links between systems, such as the information security management system (ISMS) and the contract database or outsourcing register, to ensure that information is consistently updated and accessible to the relevant internal stakeholders. Moreover, identifying, assessing, and managing third-party AI risks must be a continuous process that adapts to new threats. Regular checks and audits of relevant contractual partners are necessary to verify compliance and detect any non-compliance promptly.
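To illustrate what linking the ISMS with the contract register can surface, the sketch below joins a hypothetical ISMS extract of third-party AI systems with a contract register keyed by vendor. It reports AI systems that have no registered contract and contracts whose last audit is older than an assumed 365-day interval. Field names, vendors, and the interval are placeholders, not values taken from the text.

```python
from datetime import date, timedelta

# Hypothetical extracts; field names, vendors, and the audit interval are assumptions.
isms_ai_systems = [
    {"system_id": "S-1", "vendor": "Vendor A"},
    {"system_id": "S-2", "vendor": "Vendor B"},
    {"system_id": "S-3", "vendor": "Vendor C"},
]
contract_register = {
    "Vendor A": {"contract_id": "C-10", "last_audit": date(2024, 1, 15)},
    "Vendor B": {"contract_id": "C-11", "last_audit": date(2022, 6, 1)},
}
AUDIT_INTERVAL = timedelta(days=365)


def reconcile(systems, register, today):
    """Return (AI systems with no registered contract, contracts with an overdue audit)."""
    unregistered, overdue_audits = [], []
    for system in systems:
        contract = register.get(system["vendor"])
        if contract is None:
            unregistered.append(system["system_id"])
        elif today - contract["last_audit"] > AUDIT_INTERVAL:
            overdue_audits.append(contract["contract_id"])
    return unregistered, overdue_audits


print(reconcile(isms_ai_systems, contract_register, today=date(2024, 9, 20)))
# (['S-3'], ['C-11'])
```

Running such a reconciliation on a schedule is one way to make the third-party risk process continuous rather than a one-off exercise.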

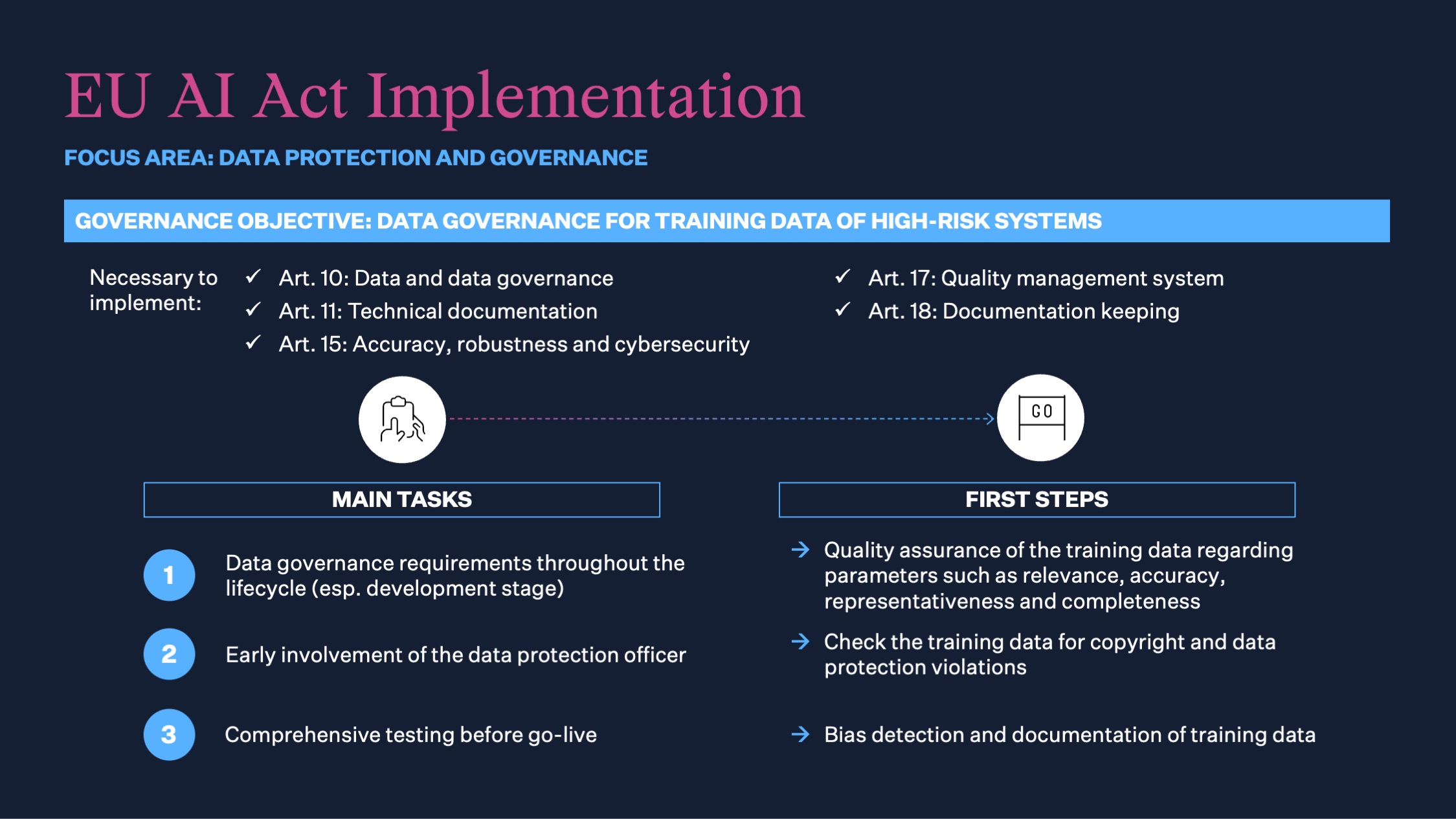

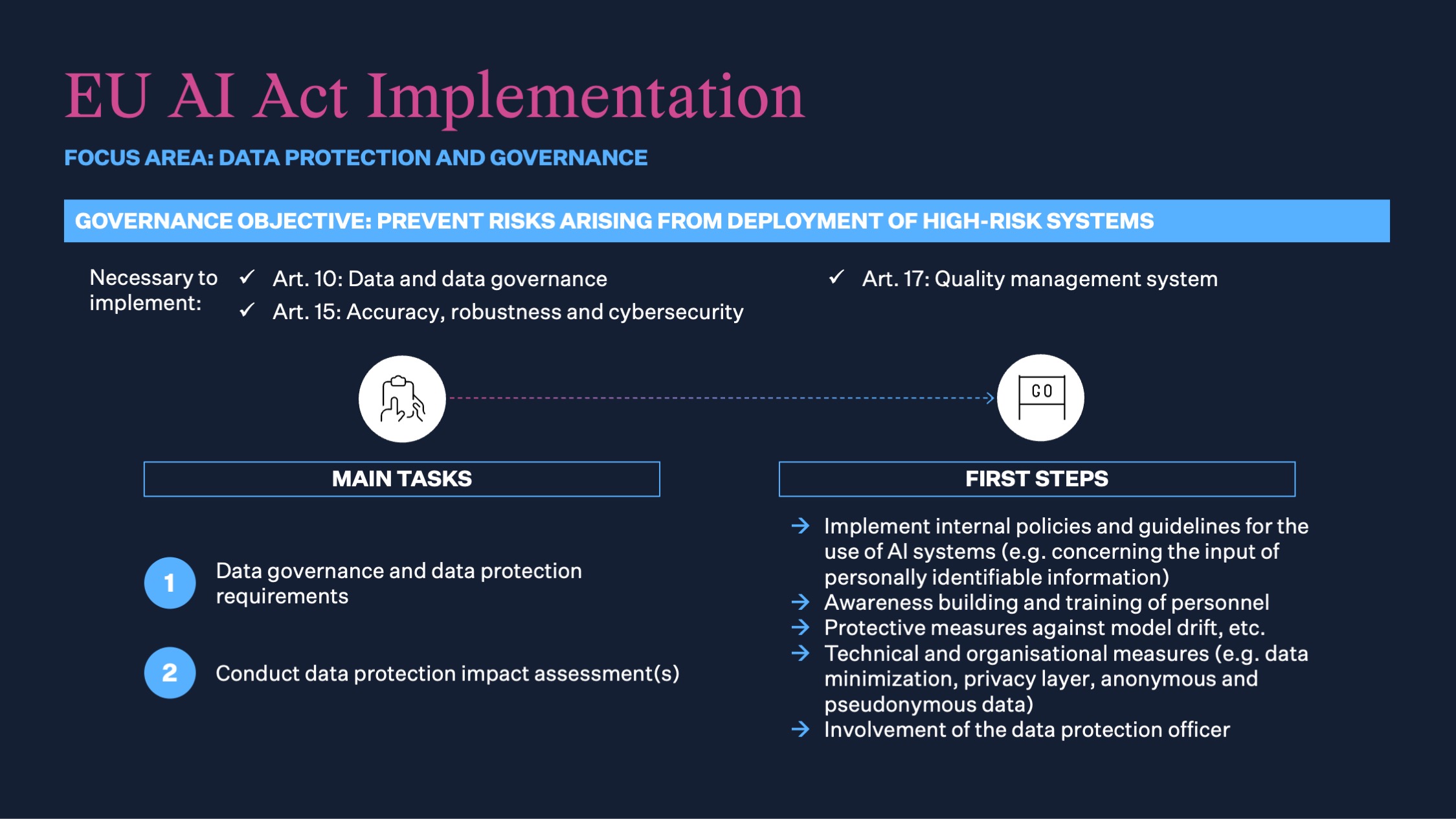

Finally, data protection and governance departments should ensure robust data management practices that comply with the EU AI Act, especially when dealing with high-risk AI systems. Two main governance objectives stand out in this area: data governance for training data of high-risk systems and preventing risks arising from the deployment of high-risk systems.

For data governance of training data, it is essential to implement stringent requirements throughout the AI system's lifecycle, particularly during the development stage. This necessitates the early involvement of data protection officers, who play a crucial role in overseeing and guiding proper data handling practices. Quality assurance processes must verify the relevance, accuracy, representativeness, and completeness of the training data. Additionally, checking for copyright compliance and data protection violations helps mitigate legal risks. Detecting and documenting biases in the training data is also essential for maintaining the fairness and performance of AI systems.
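Two of the quality criteria above, completeness and representativeness, lend themselves to automated checks. The sketch below assumes training records are dictionaries and that a reference distribution for a grouping attribute is available; the attribute names, reference shares, and 5% tolerance are illustrative assumptions. It covers only group representation and is not a full bias or fairness analysis.

```python
from collections import Counter


def completeness(records, required_fields):
    """Share of records in which every required field is present and not None."""
    if not records:
        return 0.0
    complete = sum(all(r.get(f) is not None for f in required_fields) for r in records)
    return complete / len(records)


def representation_gaps(records, attribute, reference, tolerance=0.05):
    """Return groups whose share in the data deviates from the reference share
    by more than `tolerance`, mapped to the signed deviation."""
    if not records:
        return {}
    counts = Counter(r.get(attribute) for r in records)
    total = len(records)
    gaps = {}
    for group, expected_share in reference.items():
        deviation = counts.get(group, 0) / total - expected_share
        if abs(deviation) > tolerance:
            gaps[group] = round(deviation, 3)
    return gaps


# Hypothetical training records; a label of 0 is valid, hence the explicit None check.
records = [
    {"age": 34, "region": "north", "label": 1},
    {"age": None, "region": "north", "label": 0},
    {"age": 51, "region": "north", "label": 1},
    {"age": 29, "region": "south", "label": 0},
]
print(completeness(records, ["age", "region", "label"]))  # 0.75
print(representation_gaps(records, "region", {"north": 0.5, "south": 0.5}))
# {'north': 0.25, 'south': -0.25}
```

The results of such checks can be stored alongside the dataset version, which gives a documented trail of the biases and gaps found during development.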

To prevent risks arising from the deployment of high-risk AI systems, data protection departments should develop and enforce policies governing AI system use, particularly around the input of personally identifiable information. Building awareness and providing training for staff is critical to ensure everyone understands the relevant policies and procedures. Implementing protective measures against issues such as model drift and enhancing data security through technical and organizational safeguards further strengthens risk management. Regular data protection impact assessments, alongside the ongoing involvement of data protection officers, help ensure that high-risk AI systems are deployed and managed in compliance with the EU AI Act.
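One common way to monitor for drift, chosen here as an example rather than taken from the text, is the Population Stability Index (PSI). It compares the distribution of an input feature in production against a reference sample as the sum over bins of (A_i - E_i) * ln(A_i / E_i), where E_i and A_i are the reference and production shares in bin i. The bin edges and sample values below are made up for illustration, and PSI only detects shifts in input distributions, which is one symptom of model drift rather than a complete safeguard.

```python
import math


def population_stability_index(expected, actual, bin_edges):
    """PSI = sum over bins of (A_i - E_i) * ln(A_i / E_i), where E_i and A_i are
    the shares of the reference and production samples falling in bin i.

    Values outside [bin_edges[0], bin_edges[-1]) are ignored in this sketch.
    """
    def shares(values):
        counts = [0] * (len(bin_edges) - 1)
        for v in values:
            for i in range(len(bin_edges) - 1):
                if bin_edges[i] <= v < bin_edges[i + 1]:
                    counts[i] += 1
                    break
        total = sum(counts)
        if total == 0:
            raise ValueError("no values fall inside the bin edges")
        # Floor empty bins at a tiny share so the logarithm stays defined.
        return [max(c / total, 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up values of one model input feature (e.g. a normalized score).
reference = [0.1, 0.2, 0.3, 0.6, 0.7, 0.8]
production = [0.2, 0.6, 0.7, 0.8, 0.9, 0.95]
bin_edges = [0.0, 0.5, 1.0]

drift = population_stability_index(reference, production, bin_edges)
print(round(drift, 3))  # 0.536
```

A commonly used rule of thumb treats PSI values above roughly 0.25 as a significant shift worth investigating, though thresholds should be set per use case and combined with monitoring of model performance.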

In conclusion, adapting to the EU AI Act involves addressing AI-specific risks across multiple areas within an organization. While we focused on information security, third-party management, and data protection and governance, it is important to recognize that other areas also require attention. By integrating AI governance into existing processes and frameworks, organizations can meet regulatory requirements, ensure ethical AI use, and foster a secure operational environment. This approach prepares organizations for both current and future challenges in the evolving landscape of AI regulation and technology.